IBTTA NIOP Phase 2 – Parts 1 and 2 Test Plan

FINAL

Submitted May 4, 2017

Version 1.3

Contents

1 Background

2 Approach

2.1 Test Environment

2.2 Configuration

2.3 Rounds

2.4 External Factors

3 Terms and Definitions

3.1 Supplied Tags

3.2 Capture Zone

3.3 Tag Memory

3.4 Test Parameters

3.5 Reader Configuration

3.6 Reader Interface

3.7 Application Program

3.8 Speed Tolerance

4 Equipment and System Test Requirements and Plan

4.1 Introduction

4.2 Test Schedule

4.3 Pre-Testing Setup and Assumptions

4.4 Test Rounds and Features to be Tested

4.5 Transponders

4.6 Vehicles/Drivers

4.7 Data Capture

4.8 Technology to be Tested

4.9 Minimum Performance Requirements

5 Test Parameters

5.1 General Lab Test Standards

5.2 Test Details

5.3 Anomaly Handling

5.4 Test Outcome and Final Report(s)

5.5 Limitations to Test Plan

Attachment A Test Case Sheet (SAMPLE)

Attachment B STT Test Procedures

1 Scope

2 Reference Documents

3 Background

4 Disclaimer

5 Site Overview

5.1 Test Track

5.2 Tolling Equipment Layout

5.3 Site Safety and Security

5.4 Personnel Safety

6 Personnel

6.1 Test Engineer

6.2 Track Inspection

6.3 Test Vehicle Inspection

6.4 Test Results Validation

6.5 Supporting Personnel

6.6 RFID Tags

6.7 RFID Reader

6.8 RFID Cable

7 Definitions

8 Optimized STT Plan

8.1 Representative Lap

8.2 Test Round 1 – Setup

8.3 Test Round 2 – Setup

8.4 Test Round 3 – Setup

8.5 Test Round 5 – Setup

9 MET STT Application

9.1 Functions Summary

9.2 Considerations

Attachment C STT Field Test Procedures

1 Scope

2 Site Safety and Security

3 Supporting Personnel

3.1 Test Director

3.2 Test Lead

3.3 Vehicle Marshall

3.4 Test Engineer

3.5 Drivers

4 Equipment (field testing info)

4.1 RFID Antennas

4.2 RFID Tags

4.3 RFID Reader

4.4 RFID Cable

4.5 Vehicles

4.6 Other Infrastructure

5 Pre-test Preparation

5.1 Track Closure

5.2 Track Inspection

5.3 Vehicle Inspection

5.4 Driver Training

5.5 Communication

6 Preparing Vehicles and Tags

7 Suggested Schedule

8 Daily Test Preparation

9 Round 4 Test Procedures

9.1 Single Lane Runs

9.2 Triple Lane Runs

10 Data Validation

10.1 Collection of Data

11 Error Handling

Attachment D NIOP Test Program Protocol Hypothesis Testing

Attachment E Driver Test Sheet

Attachment F Vehicle Test Sheet

Attachment G Vehicle Inspection Checklist

Attachment H Track Inspection Checklist

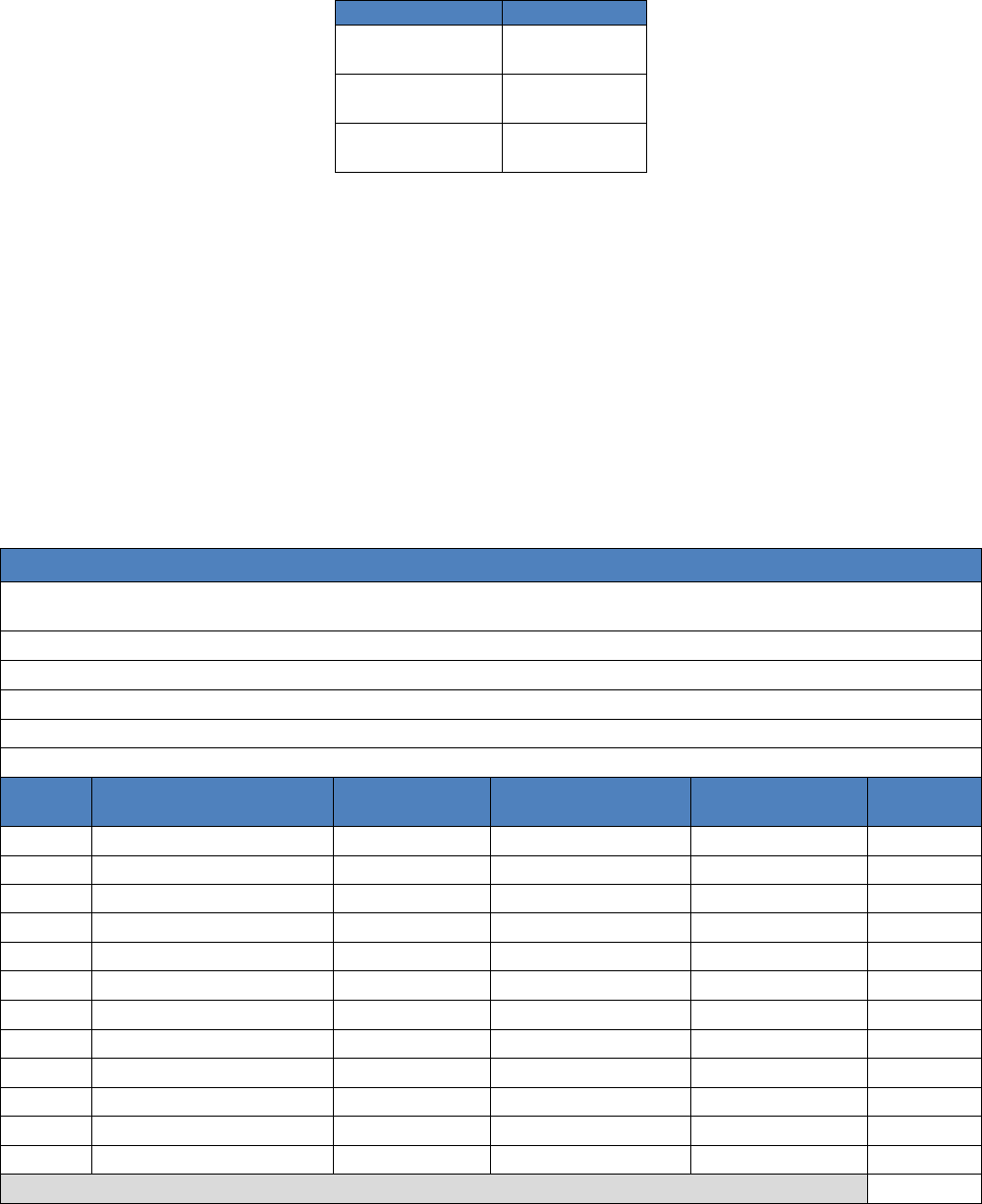

Document History

| Revision Date | Revision # | Revised By | Description |
|---|---|---|---|
| 4/7/17 | 1.0 | C. Rokoff | Draft Version delivered to IBTTA, FHWA, and Vendors |
| 4/24/17 | 1.1 | C. Rokoff | Respond to comments |
| 5/2/17 | 1.2 | C. Rokoff | Add Round 4 and some formatting clean up (formatting not in track changes) |
| 5/4/17 | 1.3 | C. Rokoff | Added statement regarding NIOP Testing Goals to Approach, added export of reader configuration to Attachments B & C, and fixed bullet numbering |

1 Background

The International Bridge, Tunnel and Turnpike Association (IBTTA) was founded in 1932 and is a worldwide

association representing toll facility owners and operators and the businesses they serve. IBTTA has members in

more than 20 countries and on six continents. Through advocacy, thought leadership and education, members are

implementing state-of-the-art, innovative user-based transportation financing solutions to address the critical

infrastructure challenges of the 21st century. IBTTA is headquartered in Washington, D.C.

The IBTTA has volunteered, in partnership with the FHWA, to undertake the mission of establishing a national

interoperable protocol (NIOP) so that toll paying customers may travel anywhere in North America with a single toll

transponder. IBTTA recognizes that many hardware components, software modules, and operational processes are

involved in implementing an Electronic Toll Collection (ETC) system program. The technical focus of this initiative,

however, deals specifically with the system components that provide the ability to automatically, accurately, and in

real-time, communicate and transmit a unique identifier and other data stored from an On-Board Unit (OBU), or

transponder, traveling with a vehicle passing through a toll lane/zone, to readers installed at the roadside.

The Roadside Operations Sub-Committee (ROSC) – a technical working group of the IBTTA – has reviewed

commonly used protocols and developed a short-list of NIOP candidates that appear to be capable of meeting the

technical aspects of the IBTTA North American Toll Interoperability Program Electronic Toll Collection Protocol

Requirements Document (Final NIOP Requirements Document). To develop the short-list, the committee evaluated

protocols against NIOP requirements and provided consideration to candidate protocols that were in common use

by IBTTA member Agencies. The short-listed protocols were accepted by the IBTTA NIOP Steering Committee in July

2014. The short-listed protocols, hereafter referred to as “Candidate Protocols” are the following:

• ISO 18000-6C (also known as ISO 18000-63, or just 6C)

• TDM (also known as IAG or E-ZPass)

• SeGo (also known as ISO 18000-6B+)

The IBTTA recruited three volunteer technology providers (the Vendors), 3M, Kapsch, and TransCore, who have committed to sponsor their respective protocols (6C, TDM, and SeGo) and to support this testing by supplying equipment and expertise to assist the IBTTA in carrying out the testing. The reader vendors will tune their equipment during set-up for each round of testing.

IBTTA selected the OmniAir (formerly OmniAir Certification Services) team for technical services to assist in the

conduct and analysis of testing the candidate NIOP protocols. The tolling protocol technology selected out of this

process will facilitate seamless travel by roadway users of tolling facilities using a single on-board device across

North America. During Phase 1 of the NIOP testing, each of the “Candidate Protocols” was verified for conformance

to its protocol specification.

This Phase 2 – Parts 1 and 2 Test Plan provides the necessary information to develop and oversee a lab/field

performance testing program for compliant NIOP candidate protocols that supports the IBTTA’s evaluation and

selection process. As required by the FHWA approved plan, a separate NIOP Phase 2 – Part 3 Test Plan will be

developed for the field testing program for Round 6 (if required).

2 Approach

The IBTTA has stipulated over 100 requirements for the NIOP protocol. These represent thousands of combinations

which, as a practical matter, cannot all be tested. The IBTTA has therefore settled on testing what they have

determined to be the most important requirements. These are:

• Handshake degradation of one protocol due to another protocol

• Tag Read accuracy

• Tag Write accuracy

The goal of these tests is to clearly define and measure these parameters to ensure consistent testing among all

protocols and vendors.

OmniAir developed the Test Approach document in collaboration with the IBTTA Roadside Operations Subcommittee

and testing subgroup members to outline testing strategies that will be used to accomplish the NIOP Testing Goals

that were established by the IBTTA. Using the approved Test Approach document as the guide, we believe the

supporting Test Plan document submitted to the IBTTA for approval conforms to the Test Approach.

2.1 Test Environment

Testing will take place in a Lab and in the “Field”. Lab testing will utilize test vehicles (approximately 1 foot long) specifically designed to carry tags, at 15 to 100 mph, down a single indoor track through a tag capture zone. Each test vehicle will carry a single tag of the selected protocol during the test. The height from the lab floor to the transponder in the STT vehicle is 2.6 feet. Field testing will utilize actual vehicles on a three-lane outdoor test facility.

The field test will employ lanes 12 ft. wide, and the mounting pipe for the antenna(s) will be 17 ft. 6 in. above the roadway. Vendors will provide and position the lab and field antenna or antennas to their requirements while maintaining the prescribed capture zone. See Section 4.3 for more details on assumptions for Vendor setup.

2.2 Configuration

Each protocol has a well-defined set of technical specifications and operational parameters for reading from and

writing to tags and it is up to the reader manufacturers and tag suppliers to follow these specifications and

provide the tools that implement the operational parameters. Conformance to the specifications was validated

during Phase 1 of the NIOP Testing. However, it is up to the protocol sponsors (i.e. Vendors) to configure those

tools and establish the physical installation to optimize the system. These configurations can be very different and

produce diverse results (e.g. handshakes, tag selection) for the same installation. For this reason, it is important

that the configuration and mode of operation of the reader is consistent across different test scenarios and

disclosed to ensure certification of the results.

2.3 Rounds

Testing is defined in a series of six “Rounds” designed to methodically determine handshake and accuracy

parameters supported by statistically significant confidence levels. Following is a summary of the Rounds, where

they are performed, and the parameter they are testing.

Table 1 – Summary of Test Rounds

| Round | Handshake/Accuracy | Lab/Field | Pass/Fail |
|---|---|---|---|
| 1 | Handshake | Lab | No |
| 2 | Handshake | Lab | Yes |
| 3 | Handshake | Lab | No |
| 4 | Handshake | Field | No |
| 5 | Accuracy | Lab | Yes |
| 6 (TBD) | Accuracy | Field | Yes |

2.4 External Factors

There are a multitude of external real world factors that can affect the parameters being tested. These range from

driver behavior to RF interference. Nearly all of these are random in nature and as such cannot be duplicated in a

controlled test environment. Rather than selecting and introducing pseudo-random parameters, the test will

exclude these factors as much as possible to derive a baseline conclusion.

3 Terms and Definitions

The items of this section apply to both lab and field testing.

3.1 Supplied Tags

Tags used for testing will be encoded per current specifications and supplied with the encoded Agency and Tag

Serial Number printed on the tag. SeGo and 6C tags will be “sticker tags” and TDM tags will be hard case with a

battery. All tags will be single protocol of the type to be mounted on the interior windshield of a car. The Tag

Serial Number must be unique for each tag of a given protocol and the Agency and/or Group code should not

correspond to any current or future toll facility. Required quantity of tags is:

• TDM – 50 (Group ID will be 1)

• 6C - 50

• SeGo - 50

3.2 Capture Zone

The “Capture Zone” shall be defined as the length (in feet) measured along the road (or track) in the direction of

travel, beginning at the point where a tag starts to read and ending at the point when the tag stops reading. The

capture zone shall not exceed 18 feet for any of the protocols. The capture zone, as defined, is not a function of

vehicle speed and is determined by moving a properly mounted tag past the antenna in 1 foot increments and

recording the points when the tag starts and stops reading. When measuring the capture zone for a protocol, the readers must

be set to single-lane, single-protocol mode. The reader

would be cycled through each of the three protocols in turn to verify capture zone size for each protocol. The field

capture zone shall be determined by each vendor but must be consistent for all rounds for that vendor’s reader.

The lab testing will use the same length cable, antenna angle, and reader configurations and the OmniAir team

will make any lab modifications necessary to replicate the field capture zones. Alternatively, the vendor can

establish their capture zone in the lab, and then it will be the vendor’s responsibility to make the capture zones in

the field replicate the lab established capture zones.
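The measurement procedure described above can be sketched in a few lines of Python. This is only an illustrative sketch, not part of the test system: the function name `capture_zone`, the sample format, and the choice to treat the first and last reading positions as the zone boundaries are assumptions made for the example. Only the 1 foot step and the 18 foot limit come from this section.

```python
from typing import List, Optional, Tuple

MAX_CAPTURE_ZONE_FT = 18.0  # upper limit stated in Section 3.2


def capture_zone(samples: List[Tuple[float, bool]]) -> Optional[Tuple[float, float, float]]:
    """Return (start_ft, stop_ft, length_ft) from (position_ft, tag_read) samples.

    Samples are assumed to be taken at 1 ft increments along the direction
    of travel. Returns None if the tag never read at any position.
    """
    read_positions = [pos for pos, tag_read in sorted(samples) if tag_read]
    if not read_positions:
        return None
    start, stop = read_positions[0], read_positions[-1]
    return start, stop, stop - start


# Hypothetical survey: the tag reads from the 4 ft mark through the 15 ft mark.
survey = [(float(p), 4 <= p <= 15) for p in range(0, 21)]
zone = capture_zone(survey)
within_limit = zone is not None and zone[2] <= MAX_CAPTURE_ZONE_FT
```

With the hypothetical survey shown, the zone runs from 4 ft to 15 ft (11 ft long), which is inside the 18 ft limit. Because the tag is moved in 1 foot steps, each boundary is only known to within one step.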

Vehicles chosen for field testing will be sedans with tag mounting locations 4.5 ft. +/- 1 ft. from the ground and

windshield angles of 24 degrees +/- 2 degrees, to correlate with the lab test vehicles, which have a fixed

windshield angle of 24 degrees.

3.3 Tag Memory

This is where information is permanently stored on the tag or can be written to the tag. It is a block (or blocks) of

binary data of a certain length. As far as the protocol is concerned it makes little or no difference what the pattern

of 1’s and 0’s is although there are some restrictions in a few places depending on the tag type. Tag memory for

the three protocols is:

• TDM - A single block of 256 bits.

1. First 96 bits - Read only: pre-programmed with protocol and agency specified information.

2. Next 160 bits - Read/write: data specified by agency

• 6C - Two blocks (see footnote 1):

1. UII - 96 bits of read only: pre-programmed with protocol and agency specified information.

2. User Memory - 512 bits of read/write data specified by agency

• SeGo - Two blocks:

1. First 64 bits - Read only: pre-programmed with protocol and agency specified information.

2. Next 64 bits - Read/write data specified by agency

All protocols store, at a minimum, the Agency ID, and the Tag Serial Number in the pre-programmed memory and

this is the data that will be used to verify a correct tag read in the test. In addition, all protocols can store the day

and time (to the second) that the tag was written to. This will be used to verify that a tag was written to properly.

The users of all three protocols have defined the meaning of the read only parts of the tag as well as the

information to be written to the read/write memory. The format and meaning of this information is given in the

attached documents.

1. TDM Tag Memory Map (24-bit Tag Serial Number)

2. 6C TOC Memory Map (28-bit Tag Serial Number)

3. SeGo Tag Memory Map (24-bit Tag Serial Number)
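For quick reference, the memory sizes listed in this section can be captured in a small lookup table. The sketch below is illustrative only: the class name `TagMemory`, the dictionary `TAG_MEMORY`, and the helper `max_serial_numbers` are hypothetical names, and the field-level layout inside each block is defined by the attached memory maps, not by this code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TagMemory:
    read_only_bits: int       # pre-programmed protocol and agency information
    read_write_bits: int      # data specified by the agency
    serial_number_bits: int   # width of the Tag Serial Number field

    @property
    def total_bits(self) -> int:
        return self.read_only_bits + self.read_write_bits


# Sizes as stated in Section 3.3. The 6C TID block is not counted here.
TAG_MEMORY = {
    "TDM": TagMemory(read_only_bits=96, read_write_bits=160, serial_number_bits=24),
    "6C": TagMemory(read_only_bits=96, read_write_bits=512, serial_number_bits=28),
    "SeGo": TagMemory(read_only_bits=64, read_write_bits=64, serial_number_bits=24),
}


def max_serial_numbers(protocol: str) -> int:
    """Number of distinct serial numbers the serial-number field can encode."""
    return 2 ** TAG_MEMORY[protocol].serial_number_bits
```

For example, `TAG_MEMORY["TDM"].total_bits` gives 256, matching the single 256-bit TDM block, and a 24-bit serial number field allows 16,777,216 distinct values per agency.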

3.4 Test Parameters

It is necessary to define the terms Read/Only Mode, Read/Write Mode, “Read”, “Write”, Handshake, and accuracy

Pass/Fail, as well as the method of establishing or measuring these parameters. The definitions are discussed in this

section.

Tag Reading and Writing

The Agency ID and Tag Serial Number can be obtained (read) for any of the protocols in less than 10 milliseconds

(ms). This means that for normal capture zones and vehicle speeds (e.g. 10 ft. & 60 mph) a tag can be read many

times (handshakes) in a single transit through the zone. Readers will generally be set up to read the tag as many

times as possible to aid in lane discrimination. In read/write mode readers will generally identify the tag through a

normal read of the read-only memory and then perform a read/write/verify cycle using read/write memory. If the

write is successful, the reader will continue reading the read-only memory. Therefore, if all goes well a tag will

experience many reads and one write as it transits the capture zone.
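The arithmetic behind "many handshakes per transit" can be checked with a short calculation. This is a rough sketch under simplifying assumptions: it takes the 10 ms figure above as the time per read (the text says reads take less than that, so the result is conservative) and ignores time sharing among protocols and lanes, write cycles, and reader overhead. The function names are hypothetical.

```python
FT_PER_MILE = 5280
SECONDS_PER_HOUR = 3600


def dwell_time_ms(zone_ft: float, speed_mph: float) -> float:
    """Time in milliseconds that a tag spends inside the capture zone."""
    speed_ft_per_s = speed_mph * FT_PER_MILE / SECONDS_PER_HOUR
    return zone_ft / speed_ft_per_s * 1000.0


def max_handshakes(zone_ft: float, speed_mph: float, read_time_ms: float = 10.0) -> int:
    """Whole number of back-to-back reads that fit in one transit."""
    return int(dwell_time_ms(zone_ft, speed_mph) // read_time_ms)


example_dwell = dwell_time_ms(10, 60)       # 10 ft zone at 60 mph
example_handshakes = max_handshakes(10, 60)
```

For the 10 ft, 60 mph example in the text, the vehicle travels at 88 ft/s, so the tag is in the zone for about 114 ms and roughly 11 reads of 10 ms each fit in a single transit. At 100 mph the same zone allows about 6.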

* The above description of tag reads and writes is subject to many reader configuration options, and the readers

from each of the Vendors are different with different options. Options include the amount of memory read

and/or written, time sharing among protocols, time sharing among lanes, treatment of a failed write cycle,

handshake reporting, and lane synchronization techniques to name a few. In addition, some readers or protocols

have default parameters that prevent communication or writing to a tag within a set time of the previous

interaction. For this reason, during the tests no tag will transit the same capture zone again in less than one

minute. The following definitions are provided to ensure a common understanding of the terms used in the test.

Footnote 1: 6C also contains the TID block; however, this additional information is not required by the NIOP, nor is it currently used in many 6C installations.

Definitions

A. Read – The NIOP requirement is to read a minimum of 56 bits of information. A Tag Read is defined as obtaining and reporting the tag data that is normally required in tolling, defined or approved by a tolling organization, or necessary for this test. Data required for a “Read” is that which is normal for the protocol. That is:

o 6C - The entire 96 bits of UII memory

o TDM - The entire 256 bits of tag memory

o SeGo - The entire 64-bit read only page

Vendors must certify that their reader configuration will retrieve this information at a minimum. The

system will report a Read in a single Read Message containing the following information for each

protocol:

o Tag Agency ID

o Tag Serial Number

o Day and Time the tag was first read for that transit. Time is recorded to a resolution of at least one second.

o Antenna Number

o Handshake counts on the above antenna

A Read message is required to be provided by the test system for every antenna that reads a tag regardless of the number of handshakes.

B. Write - A Tag Write is defined as a reader successfully storing at least 52 bits of data (IBTTA requirement)

into the tag Read/Write memory in accordance with specifications or practices currently employed at a

toll facility. For our purposes data stored to a tag will follow established specifications:

o 6C - 60-bit minimum into User Memory.

o TDM - 160 bits in accordance with the TDM specification and E-ZPass format.

o SeGo - 64-bit read/write page

Data to be written by the reader to the tag by Vendor Readers for all protocols is:

o Agency = 127 (OmniAir)

o Plaza: Lab = 1; Field = 2

o Lane: Lab = 4; Field = 1, 2, or 3 accordingly

o Date and time stamp to one second resolution

The test system will report a Write in a single Write Message to logs containing the following information

for all protocols:

o Tag Agency ID

o Tag Serial Number

o Day and Time the tag was written to. Time is recorded to a resolution of at least one second.

o Antenna Number

o Read/Write memory contents prior to the write (first successful read with each new transponder that enters the capture zone)

o Read/Write memory contents after the write (last successful read for the transponder before it exits

the capture zone)

For the accuracy tests, the system will verify that the write was successful on each successive transit of

the tag by examination of the time stamp in the memory content prior to write and comparison to the

reported written data from the previous transit of the same tag. The first read will contain the same

contents including date and time from the last time this transponder was written to and the contents of

the user memory will be compared to the last read for this same transponder. This will require one

additional pass of the tag at the end of each accuracy test. For the Handshake tests, write accuracy is not

critical and the write mode will be verified by the system using the time stamp from successive Write

messages.

C. Handshake - A correct transmission of the tag’s read-only memory from the tag to the reader. The

transmission must involve the complete cycle of interrogation and tag response. A “handshake” is

synonymous with a “Read”.

D. Transaction - A transaction is the transit of a transponder into the capture zone and the transaction

includes all the “handshakes” for that transit.

E. Transponder – the terms transponder, tag and OBU are used interchangeably throughout this document.

F. Read/Only Mode - A mode of operation wherein a protocol performs one or more Reads as the tag

transits the capture zone.

G. Read/Write Mode - A mode of operation wherein a protocol performs one or more Tag Reads and one

Tag Write as a tag transits the toll zone. For purposes of this test, each successive Tag Write must contain

the date and time to the second.

H. Accuracy - A “Read OK” will be logged for a tag each time it transits a capture zone and results in a

successful Read Message when in read only mode or a successful Read Message or Write Message when

in read/write mode. Failure to generate either of these messages will result in logging a “No Read” for

that transit. A “Write OK” will be logged for a tag each time it transits a capture zone and results in a

successful Write message when in Read/Write mode. Failure to generate this message will result in

logging a “No Write” for that transit. The total count of Read OK and Write OK will be used to determine

the PASS or FAIL of the accuracy test.
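The logging rule in definition H can be sketched as a small function. This is an illustrative reading of the definition, not the test system's code: the function name and the mode labels "read_only" and "read_write" are assumptions.

```python
from typing import List


def log_transit(mode: str, read_message: bool, write_message: bool) -> List[str]:
    """Return the log entries for one transit of one tag.

    mode is "read_only" or "read_write" (hypothetical labels);
    the two flags say whether a successful Read Message or
    Write Message was generated during the transit.
    """
    if mode == "read_only":
        return ["Read OK" if read_message else "No Read"]
    if mode == "read_write":
        # In read/write mode either message type counts as a successful read.
        read_entry = "Read OK" if (read_message or write_message) else "No Read"
        write_entry = "Write OK" if write_message else "No Write"
        return [read_entry, write_entry]
    raise ValueError(f"unknown mode: {mode!r}")
```

For example, a read/write transit that produces a Read Message but no Write Message would be logged as "Read OK" and "No Write".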

3.5 Reader Configuration

Vendors must install the antennas and readers and configure the readers for the tests. There are two factors that
are important to these tests: Protocol Modes and Lane Modes. The “Candidate Protocol Reader” is the reader
supplied by the sponsoring vendor promoting their Candidate Protocol for the purpose of evaluation of that
protocol. The “Local Protocol” for each multi-protocol reader will be the Read Only Protocol being tested
alongside the Candidate Protocol in each set of trials. These are listed below for each Vendor.

Table 2 - Protocol Modes

Protocol  Kapsch                  3M                      TransCore
TDM       Read/Write (Candidate)  Read Only (Local)       Read Only (Local)
6C        Read Only (Local)       Read/Write (Candidate)  Read Only (Local)
SeGo      Read Only (Local)       Read Only (Local)       Read/Write (Candidate)

Table 3 - Lane Modes

Lane Mode          Kapsch    3M       TransCore
Single             6C        TDM      TDM
Single             SeGo      SeGo     6C
Single and Triple  TDM/6C    6C/TDM   SeGo/TDM
Single and Triple  TDM/SeGo  6C/SeGo  SeGo/6C

Readers must be strictly configured for the actual number of lanes in which the test is run as shown in the

following chart:

Table 4 - Lane Configuration

Round                      Configuration
Round 1 (Lab)              Single Lane
Round 2 (Lab)              Single Lane
Round 3 (Lab)              Single Lane
Round 4 (Field) - 1 lane   Single Lane
Round 4 (Field) - 3 lanes  Triple Lane
Round 5 (Lab)              Single Lane

3.6 Reader Interface

This defines the commands and messages between a reader and a computer that allow the programmer to

establish the way reads and writes will occur consistent with the options allowed by the protocol. The commands

and responses are defined in the reader interface control document (ICD). The ICD also defines commands that

allow the programmer to establish operational parameters that are not a function of the tag protocol but are

necessary for the correct operation of the system. Examples are time slicing and power levels for the various

protocols. It is not assumed that the ICDs are the same for each protocol. For example, it is quite possible that the

ICD for 6C on one reader is different from the ICD for 6C on another.

3.7 Application Program

Application program - This is the software developed by MET Labs or others to interact with the reader via the ICD

commands to initiate a test, establish a mode, define a protocol, tabulate data, etc. and ultimately produce useful

test results. An understanding of the ICD is necessary to develop this software.

3.8 Speed Tolerance

This is the variance from the target speed under test for which test results will be included in the analysis. For 60
mph, the speed tolerance will be ±5% and include speeds between 57 and 63 mph. For 80 mph, the speed
tolerance will be ±5% and include speeds between 76 and 84 mph. For the 100 mph speed, the speed tolerance will
also be ±5%, rounded to the nearest whole number, with a target speed of 95 mph to avoid including trials that
exceed 100 mph. Speeds between 90 and 100 mph will be included, with a minimum average of at least 95 mph, which
will be monitored throughout the trials to ensure the average is on the higher side of the speed tolerance. Any
trials that are slower or faster than the speed tolerance limits above will be excluded and additional
replacement trials will be done. The concern with exceeding 100 mph is for Round 5, where performance is
measured, and for a missed read and/or write on a trial that exceeded the maximum 100 mph speed requirement.
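The inclusion rule above can be sketched as a simple filter. This is a minimal sketch under stated assumptions: the function names are hypothetical, and the limits are treated as inclusive, which the plan does not state explicitly.

```python
from typing import Iterable

# Inclusion windows in mph, taken from the speed tolerance text above.
SPEED_LIMITS_MPH = {
    60: (57.0, 63.0),
    80: (76.0, 84.0),
    100: (90.0, 100.0),   # target speed of 95 mph
}
MIN_AVERAGE_AT_100_MPH = 95.0


def trial_included(nominal_mph: int, measured_mph: float) -> bool:
    """True when the measured speed falls inside the tolerance window.

    Assumption: both limits are inclusive.
    """
    low, high = SPEED_LIMITS_MPH[nominal_mph]
    return low <= measured_mph <= high


def average_ok_at_100(measured_speeds: Iterable[float]) -> bool:
    """Check the minimum-average requirement over the included 100 mph trials."""
    kept = [s for s in measured_speeds if trial_included(100, s)]
    return bool(kept) and sum(kept) / len(kept) >= MIN_AVERAGE_AT_100_MPH
```

A trial for which `trial_included` is false would be excluded and replaced, and `average_ok_at_100` could be evaluated as trials accumulate to monitor the running average.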

4 Equipment and System Test Requirements and Plan

4.1 Introduction

The purpose of this document is to provide the overall plan of the lab tests approved by IBTTA to establish the

handshake and read/write performance of the three (3) candidate protocols. This plan addresses detailed

attributes of the testing process including test objectives, test prerequisites, test controls, and a discussion of

the various test scenarios necessary to achieve the objectives. An additional document – the Test Procedures –

will detail the execution of the individual test steps to carry out this plan.

Testing will be performed with each protocol as the “Candidate” and one other protocol as the “Local” protocol

with dual protocol readers. The testing will determine if the handshake performance degrades within an

allowable limit in dual protocol mode, and the performance testing will determine if the read and read/write

accuracy are within the IBTTA specified performance standards. The performance requirements from the IBTTA

Final Requirements Document, Section 3.7 are shown in Section 4.9 of this Test Plan for each reader and tag

protocol combination under test.

Throughout the duration of the testing and reporting, it is not the intent of this testing to compare one vendor’s
technology/equipment to other vendors’ systems/results.

4.2 Test Schedule

The OmniAir Team will coordinate with the ROSC and the three reader vendors, 3M, Kapsch, and TransCore in

finalizing the proposed test schedule. Lab testing will take place at MET Labs facility in Baltimore, Maryland. The

first three rounds will be tested in back-to-back sequence for each reader vendor to limit site visits required by

vendors. The Round 4 field testing will be done at the Capital Raceway in Crofton, Maryland. The Round 6 (if

required) field testing will require a different test track to accommodate the 100 mph requirement which will be

researched as part of the Round 6 definition. More details on field testing will be covered in the separate Field

Test Plan.

Table 5 - Test Schedule by Rounds

Test Type  Description                                                                       Dates  Location
Round 1    Single Protocol Reader Mode, Local Protocol Handshake                             TBD    Lab
Round 2    Dual Protocol Reader Mode, Local Protocol Handshake Degradation                   TBD    Lab
Round 3    Dual Protocol Reader Mode, Tag of Candidate Protocol Handshake Data Collection    TBD    Lab
           (For comparison to Field)
Round 4    Dual Protocol Reader Mode, Tag of Candidate Protocol Handshake Data Collection,   TBD    Field
           Single and Triple Lane (For comparison to Lab)
Round 5    Dual Protocol Reader Mode, Candidate Protocol Performance Testing for Read and    TBD    Lab
           Write
Round 6    Dual Protocol Reader Mode, Performance Testing for Read and Write, Triple Lane    TBD    Field
           only

4.3 Pre-Testing Setup and Assumptions

The OmniAir Team will be comprised of OmniAir staff, TTI and EVC consultants, MET Labs and IBI testing

personnel. AVI reader manufacturers 3M, Kapsch and TransCore staff are NOT part of the OmniAir Team but will

be required to come to MET Labs in Baltimore, Maryland and the field track site Capital Raceway in Crofton, MD

for setup, configuration and tuning of their readers and antennae before each Round of testing and be available

to witness the lab and field testing. The AVI reader vendor representatives must be present to witness testing

but are not considered active members of the OmniAir Team. TTI will coordinate schedules with IBTTA’s ROSC so

that IBTTA has an opportunity to make plans to attend. In any event, at least one week’s notice will be given to

IBTTA prior to the start of any component of the test program.

In order to bring the assessment to a timely and successful conclusion, the OmniAir Team would require the

following activities and test controls to be performed:

1) The reader system will be set up on-site at MET Labs or the Round 4 field track site Capital Raceway, by the

AVI reader vendor prior to each round of lab or field testing, in accordance with existing IBTTA and vendor

requirements in terms of site surveys, etc. Tuning parameters shall be typical of a real-world ORT

installation. Vendors will be expected to fully document their reader configurations including time and/or

frequency sharing in all configurations to ensure that it is representative of a production configuration and

not designed solely to support this testing.

2) The vendor provided antennas will be mounted by the OmniAir Team or Vendor ahead of their testing date

on the same mounting apparatus for all vendors for each round of testing, and validated by the vendors for

height and angle. An electric scissor lift model Skipjack SJIII 3215/19 will be rented by MET for the OmniAir

Team and the Reader Vendors to use for equipment setup.

3) Each vendor is required to define a nominally identical capture zone for each protocol up to a maximum of

18 feet. Prior to data capture, static testing to recognize each antenna footprint will be carried out and will

be documented for incorporation into the final test report. Test vehicles will be placed at various points

along the test track to map out the capture zone and the Test Engineer will determine where the capture

zone starts and ends.

4) The Vendor can select the field antenna height appropriate to their equipment, the capture zone

requirements above, and the height of the antenna to the transponder height in the field vehicles. Note that

the field antenna mounting pipe is 17.5 feet from the roadway. The antenna height in the lab will be

adjusted to a lower height to account for the difference between the field vehicle transponder height and

the lab test vehicle transponder height (approximately 2.6 feet from the ground). The vehicles to be rented

for field testing have an average transponder height of 4.5 feet from the ground, so the antenna should be

mounted 1.9 feet lower in the lab.

5) Each Reader Vendor will set the antenna angle they deem appropriate for the field setting and the specified

angle will be replicated in the lab (or the lab antenna angle chosen can be replicated in the field).

6) The length of the cable from the reader to the antenna will be determined by each Reader Vendor and they

may use whatever length cable they deem appropriate in the lab. Likewise, in the field configuration, the

Vendors can determine whatever length cables and types of cabling they deem appropriate for that setting

and distances.

7) The Reader Vendors will configure their Reader for their Candidate protocol operating in read/write mode

and configured to write at least 52 bits of data and configure the Local protocol under test in read only

mode.

8) The Reader Vendor will establish system properties such as power for each protocol according to the

Vendor’s normal lane configuration. MET will validate the settings.

9) Radio Frequency (RF) interference existing within the locality of the lab and field test sites will have to be

identified prior to testing. This will be done using an RF spectrum analyzer which will be on site both before

and during the testing period. Any unusual activity occurring during testing will be reported. All RF

interference testing will be completed by the respective Vendors or the OmniAir team. Reader vendors will

be on-site during actual testing.

10) All transaction data gathered during testing will be readily available to the OmniAir Team and will be made

available for the Candidate reader to the Sponsoring Vendor only, for analysis and interpretation on site.

11) In order to limit an exhaustive set of parameters the OmniAir Team will adhere to manufacturer’s

recommendations in terms of tag placement on windshield in field tests, etc.

12) Support staff from AVI Reader Vendors (3M, Kapsch and TransCore) will be available to the OmniAir Team

prior to testing to confirm and verify reader parameters, as well as during the test conduct stage should any

technological issues arise.

13) The OmniAir Team will supply a limited number of vehicle types for the field test that match the windshield

angle specified in Section 3.2 of this document.

14) During testing, only the reader system being tested will be active and all other reader systems will be

powered off.

15) After being mounted in a test vehicle, each tag shall be read using the tag tester/handheld reader to ensure

it is functional.

16) Due to timeout limitations, the time between gantry transits needs to be controlled so that the reader sees

the tag as a new occurrence every transit (default time is 60 seconds since the last time the tag was written).

This will require enough test vehicles to avoid the same transponder travelling more often than every 60

seconds.

17) Anomalies or apparent failures will be investigated by the OmniAir Team as further detailed in Section 5.3.

All anomalies or failures will be fully documented for inclusion in the final report.
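Two of the setup controls above lend themselves to a quick numeric check: the lab antenna height offset in item 4 and the 60-second spacing in item 16. The sketch below is illustrative only. The function names are hypothetical, and treating a gap of exactly 60 seconds as acceptable is an assumption.

```python
from typing import List, Tuple

FIELD_TAG_HEIGHT_FT = 4.5    # average transponder height in field vehicles
LAB_TAG_HEIGHT_FT = 2.6      # approximate transponder height in lab vehicles
MIN_REPEAT_INTERVAL_S = 60   # default reader timeout since the last write


def lab_antenna_height_ft(field_antenna_height_ft: float) -> float:
    """Lower the field antenna height by the transponder height difference."""
    offset = FIELD_TAG_HEIGHT_FT - LAB_TAG_HEIGHT_FT   # 1.9 ft
    return round(field_antenna_height_ft - offset, 1)


def transits_too_close(transit_times_s: List[float]) -> List[Tuple[float, float]]:
    """Return consecutive transit pairs of one tag that are under 60 s apart.

    transit_times_s holds the gantry transit times of a single transponder,
    in seconds, in ascending order.
    """
    return [(earlier, later)
            for earlier, later in zip(transit_times_s, transit_times_s[1:])
            if later - earlier < MIN_REPEAT_INTERVAL_S]
```

If a vendor chose to mount at the 17.5 foot field pipe height, `lab_antenna_height_ft(17.5)` gives 15.6 feet for the lab.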

4.4 Test Rounds and Features to be Tested

For each of the following test objectives and Test Rounds, the following performance parameters will be

analyzed and documented: handshake counts, successful reading of select transponder information and

successful writing to transponders of select reader information (in accordance with each protocol’s

specification). For Performance testing in Rounds 5 and 6, a single accurate read will count as a pass, and a

single accurate write will count as a pass for each trial.

Each test round contains multiple scenarios. Each scenario consists of multiple trials (tag transits). A test cycle
consists of a set of scenarios and rounds conducted with one Candidate protocol.

All test scenarios will be conducted using a single reader in the lab and multiple readers in the field (if required
by the vendor), with separate test cycles for each of the three readers (3M, Kapsch and TransCore). The readers
will be set up in dual protocol mode except in Round 1, which uses single protocol mode. The antenna(s) to be
used in the lab and the field will be specified by each of the AVI reader vendors. A combination of sticker (6C and
SeGo) and interior hardcase (TDM) transponders will be used depending on the test scenario. Exterior
transponders are not included in this testing.

Each lab and field test scenario in Rounds 1 - 4 will include 50 transactions/read attempts for each Candidate
Reader (6C, TDM and SeGo) with a combination of Reader Protocols (6C, TDM and SeGo), Tag Protocols (6C,
TDM and SeGo) and Speeds (60, 80 and/or 100 mph depending on the Round). Round 5 lab test scenarios
will have a minimum of 1609 transactions/read and read/write attempts.

Part 1 Testing:

Round 1 Test Objective: Determine Local protocol handshakes in single protocol mode using each Candidate

Protocol reader (e.g. 3M reader reading only TDM, and then only SeGo).

The purpose of this test is to establish the baseline handshake values for each Local protocol using each

Candidate reader. The mapping of Local protocols to each Candidate reader are as follows:

Table 6 - Reader/Protocols for Round 1

Candidate Reader  Local Protocols
6C (3M)           SeGo, TDM
SeGo (TransCore)  6C, TDM
TDM (Kapsch)      6C, SeGo

This test will take each Candidate reader, configure it to operate in a read-only single protocol mode for each of

the Local protocols specified above, and capture handshake data for that Local protocol to establish the Local

protocol baselines (one for each Local protocol) associated with that Candidate reader. Transponders utilized

will match the Local protocol for which the reader is configured at the time.

Round 1 Test Objective will test handshake performance in the lab, where there is one lane, and all test vehicles
drive at designated speeds of 60 or 100 MPH. There will be 50 trials per scenario for each Candidate Reader with
each of its Local Protocols, with different test scenarios using different Local protocols. The tag
protocol will match the Reader Local protocol in use for each scenario. The speeds tested will alternate from
scenario to scenario between 60 and 100 mph. There are a total of 600 trials in Round 1.

After this test, we will have handshake data for each Local protocol from the reader provided by each candidate.
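The Round 1 scenario matrix follows directly from the reader-to-Local-protocol mapping and the two speeds. The sketch below enumerates it as a cross-check on the scenario and trial counts. The function and dictionary key names are hypothetical, and the ordering (100 mph before 60 mph within each pairing) is chosen to mirror the scenario table.

```python
from typing import Dict, List

# Local protocols per Candidate reader, as listed for Round 1.
LOCAL_PROTOCOLS = {
    "6C (3M)": ["TDM", "SeGo"],
    "TDM (Kapsch)": ["6C", "SeGo"],
    "SeGo (TransCore)": ["6C", "TDM"],
}
SPEEDS_MPH = [100, 60]
TRIALS_PER_SCENARIO = 50


def round1_scenarios() -> List[Dict[str, object]]:
    """Enumerate the single-protocol baseline scenarios for Round 1."""
    scenarios: List[Dict[str, object]] = []
    for reader, local_protocols in LOCAL_PROTOCOLS.items():
        for local in local_protocols:
            for speed in SPEEDS_MPH:
                scenarios.append({
                    "number": len(scenarios) + 1,
                    "candidate_reader": reader,
                    "reader_protocol": local,   # read-only, single protocol
                    "tag_protocol": local,      # tag matches the Local protocol
                    "speed_mph": speed,
                    "trials": TRIALS_PER_SCENARIO,
                })
    return scenarios


if __name__ == "__main__":
    rows = round1_scenarios()
    print(len(rows), "scenarios,", sum(r["trials"] for r in rows), "trials")
```

Three readers, two Local protocols each and two speeds give 12 scenarios, and at 50 trials per scenario the total is 600 trials.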

Table 7 - Round 1 Scenarios

Round 1 – Single Protocol Baseline
Purpose: To determine Local protocol handshakes in single protocol mode using the readers from each candidate (e.g.
3M reader reading only TDM, and then only SeGo).
Entrance Criteria: Equipment installed and configured.
Success Criteria: This is not a pass/fail test.
Test Location: LAB
Lane Configuration: ORT (Single Lane)
Reader Configuration: Single Protocol

Scenario #  Candidate Reader  Reader Protocol (Read-Only)  Tag Protocol  Speed (mph)  Trials
1           6C (3M)           TDM (only)                   TDM           100          50
2           6C (3M)           TDM (only)                   TDM           60           50
3           6C (3M)           SeGo (only)                  SeGo          100          50
4           6C (3M)           SeGo (only)                  SeGo          60           50
5           TDM (Kapsch)      6C (only)                    6C            100          50
6           TDM (Kapsch)      6C (only)                    6C            60           50
7           TDM (Kapsch)      SeGo (only)                  SeGo          100          50
8           TDM (Kapsch)      SeGo (only)                  SeGo          60           50
9           SeGo (TransCore)  6C (only)                    6C            100          50
10          SeGo (TransCore)  6C (only)                    6C            60           50
11          SeGo (TransCore)  TDM (only)                   TDM           100          50
12          SeGo (TransCore)  TDM (only)                   TDM           60           50
Total                                                                                 600 Trials

Round 2 Test Objective: Determine Local protocol handshake degradation in dual protocol operation.

Measure Local protocol handshake performance for all three (3) Candidate protocols (6C, TDM and SeGo) while the
reader is configured to perform read and write for the Candidate protocol and read-only for the Local protocols in
dual protocol operation.

The Round 2 Test Objective will test handshake performance in the lab, and all test vehicles drive at designated
speeds of 60 or 100 MPH. There will be 50 trials per scenario for each Candidate Reader in dual protocol mode,
with the Candidate Protocol in read/write mode and the Local protocol in read only mode, and different scenarios
using different Local Protocols. The tag protocol will match the Local protocol in use for each scenario. The
speeds tested will alternate between 60 and 100 mph from scenario to scenario. There are a total of 600 trials in
Round 2.

After Round 2 testing, we can determine how much the Local protocols are degraded in a dual protocol mode,

for all three candidate protocols. Analysis will be done to determine the degradation of each Local protocol in

dual protocol mode compared to Round 1 results of the same Local protocol in single protocol mode to

determine the percentage of degradation. If a Local protocol’s handshakes are degraded more than 60%

compared to the single protocol handshakes, then this protocol will fail as it cannot be supported adequately in

dual protocol mode which is an IBTTA requirement.
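The degradation comparison can be sketched as a percentage drop from the Round 1 baseline. This is a minimal sketch, not the plan's analysis procedure: the function names are hypothetical, and the plan does not say how handshake counts are aggregated, so the inputs are assumed to be comparable summary values (for example, mean handshakes per transit) for the same Local protocol, reader and speed.

```python
MAX_DEGRADATION_PERCENT = 60.0


def degradation_percent(single_protocol_handshakes: float,
                        dual_protocol_handshakes: float) -> float:
    """Percentage drop in handshakes from single to dual protocol mode."""
    if single_protocol_handshakes <= 0:
        raise ValueError("baseline handshake value must be positive")
    drop = single_protocol_handshakes - dual_protocol_handshakes
    return 100.0 * drop / single_protocol_handshakes


def passes_round2(single_protocol_handshakes: float,
                  dual_protocol_handshakes: float) -> bool:
    """Pass when degradation is less than or equal to 60%."""
    return degradation_percent(
        single_protocol_handshakes, dual_protocol_handshakes
    ) <= MAX_DEGRADATION_PERCENT
```

For example, a baseline of 100 handshakes that falls to 40 in dual protocol mode is a 60% degradation and still passes, while a fall to 39 does not.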

Table 8 - Round 2 Scenarios

Test Round 2 – Dual Protocol Handshake Degradation
Purpose: To determine Local protocol handshake degradation in dual protocol operation.
Entrance Criteria: Round 1 Complete
Success Criteria: This is a pass/fail test. If handshake degradation is less than or equal to 60%, for all combinations,
the protocol passes.
Test Location: LAB
Lane Configuration: ORT (Single Lane)
Reader Configuration: Dual Protocol

Scenario #  Candidate Reader  Reader Protocol 1 (Read/Write)  Reader Protocol 2 (Read-Only)  Tag Protocol  Speed (mph)  Trials
1           6C (3M)           6C                              TDM                            TDM           100          50
2           6C (3M)           6C                              TDM                            TDM           60           50
3           6C (3M)           6C                              SeGo                           SeGo          100          50
4           6C (3M)           6C                              SeGo                           SeGo          60           50
5           TDM (Kapsch)      TDM                             6C                             6C            100          50
6           TDM (Kapsch)      TDM                             6C                             6C            60           50
7           TDM (Kapsch)      TDM                             SeGo                           SeGo          100          50
8           TDM (Kapsch)      TDM                             SeGo                           SeGo          60           50
9           SeGo (TransCore)  SeGo                            6C                             6C            100          50
10          SeGo (TransCore)  SeGo                            6C                             6C            60           50
11          SeGo (TransCore)  SeGo                            TDM                            TDM           100          50
12          SeGo (TransCore)  SeGo                            TDM                            TDM           60           50
Total                                                                                                                   600 Trials

Round 3 Test Objective: Measure handshake data of the candidate protocol under test for comparison to

similar field data.

Round 3 will measure handshake performance for all three (3) Candidate protocols 6C, TDM and SeGo

performing read and write with the Candidate Reader which is set for read-only for the Local protocols in dual

protocol operation, for comparison to similar field data to be collected in Round 4. See Attachment C – Draft of

National Interoperability Test Program Protocol Hypothesis Testing for the statistical approach to comparing

Rounds 3 and 4.

Round 3 Objective is to gather handshake data in the lab, where there is one lane, and all test vehicles drive at
designated speeds of 60, 80 or 100 mph. There will be 50 trials per scenario for each Candidate Reader in dual
protocol mode, with the Candidate Protocol paired in turn with each Local Protocol for that reader. The tag
protocol will match the Candidate protocol in use for each scenario. The speeds tested will alternate between 60,
80 and 100 mph. There is a total of 900 trials in Round 3. The number of scenarios has been changed from the Test
Approach document to include an additional speed of 80 mph to match the revised upper speed that will be used in
the field Round 4 data gathering. This was based on preliminary correlation testing done between the lab and
field, where plots of handshake counts vs. speed for each protocol followed a predicted path. The additional 80
mph speed will not be added to Rounds 1 and 2, as it is unnecessary for measuring the handshake degradation, which
is done in the lab only.

Table 9 - Round 3 Scenarios

Test Round 3 – Lab Handshake Correlations
Purpose: To measure handshake data of the candidate protocol under test for comparison to similar field data.
Entrance Criteria: Round 2 Complete
Success Criteria: This is not a pass/fail test.
Test Location: LAB
Lane Configuration: ORT (Single Lane)
Reader Configuration: Dual Protocol

Scenario #  Candidate Reader  Reader Protocol 1 (Read/Write)  Reader Protocol 2 (Read-Only)  Tag Protocol  Speed (mph)  Trials
1           6C (3M)           6C                              TDM                            6C            60           50
2           6C (3M)           6C                              TDM                            6C            80           50
3           6C (3M)           6C                              TDM                            6C            100          50
4           6C (3M)           6C                              SeGo                           6C            60           50
5           6C (3M)           6C                              SeGo                           6C            80           50
6           6C (3M)           6C                              SeGo                           6C            100          50
7           TDM (Kapsch)      TDM                             6C                             TDM           60           50
8           TDM (Kapsch)      TDM                             6C                             TDM           80           50
9           TDM (Kapsch)      TDM                             6C                             TDM           100          50
10          TDM (Kapsch)      TDM                             SeGo                           TDM           60           50
11          TDM (Kapsch)      TDM                             SeGo                           TDM           80           50
12          TDM (Kapsch)      TDM                             SeGo                           TDM           100          50
13          SeGo (TransCore)  SeGo                            6C                             SeGo          60           50
14          SeGo (TransCore)  SeGo                            6C                             SeGo          80           50
15          SeGo (TransCore)  SeGo                            6C                             SeGo          100          50
16          SeGo (TransCore)  SeGo                            TDM                            SeGo          60           50
17          SeGo (TransCore)  SeGo                            TDM                            SeGo          80           50
18          SeGo (TransCore)  SeGo                            TDM                            SeGo          100          50
Total                                                                                                                   900 Trials

Round 4 Data Gathering Objective: Gather handshake data of the candidate protocols for comparison to

similar lab data.

NOTE: Modification of the upper speed limit from 100 mph to 80 mph from the Test Approach was made as

described in Round 3.

Measure handshakes for all three (3) Candidate protocols 6C, TDM and SeGo performing read and write with the

Candidate Reader and read-only for the local protocols in dual protocol operation, for comparison to similar lab

data previously collected in Round 3.

Round 4 Test Objective will test handshake performance in the field, where there will be single lane and triple

lane testing, and all cars drive at designated speeds. There will be 50 trials for each Candidate Reader using the

Reader Protocol that matches the Candidate Reader and alternating between the other two local protocols for

dual protocol mode. The tag protocol will match the Candidate protocol in use for each scenario. The speeds

tested will alternate between 60 and 80 mph. The addition of the triple lane testing doubles the number of trials

in this Round 4 to 1200 trials.
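The trial totals for the dual protocol handshake rounds can be cross-checked by counting the combinations of reader/Local pairing, speed and lane mode. The sketch below is illustrative only, and the function name is hypothetical.

```python
from itertools import product
from typing import Sequence

# Local protocols paired with each Candidate reader in dual protocol mode.
LOCAL_PROTOCOLS = {
    "6C (3M)": ("TDM", "SeGo"),
    "TDM (Kapsch)": ("6C", "SeGo"),
    "SeGo (TransCore)": ("6C", "TDM"),
}
TRIALS_PER_SCENARIO = 50


def total_trials(speeds_mph: Sequence[int], lane_modes: Sequence[str]) -> int:
    """Count scenarios as pairing x speed x lane mode, then multiply by 50."""
    pairings = [(reader, local)
                for reader, local_protocols in LOCAL_PROTOCOLS.items()
                for local in local_protocols]
    scenarios = list(product(pairings, speeds_mph, lane_modes))
    return len(scenarios) * TRIALS_PER_SCENARIO


if __name__ == "__main__":
    print("Round 3:", total_trials([60, 80, 100], ["Single"]))
    print("Round 4:", total_trials([60, 80], ["Single", "Triple"]))
```

With six reader/Local pairings, Round 3 (three speeds, single lane) comes to 18 scenarios and 900 trials, and Round 4 (two speeds, single and triple lane) comes to 24 scenarios and 1,200 trials.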

Table 10 - Round 4 Scenarios

Test Round 4 – Field Variable Correlation
Purpose: To measure handshake data of the candidate protocols for comparison to similar lab data.
Entrance Criteria: Round 3 Complete
Success Criteria: This is not a pass/fail test.
Test Location: FIELD
Lane Configuration: ORT (Single and Triple Lane)
Reader Configuration: Dual Protocol

Scenario #  Candidate Reader  Reader Protocol 1 (Read/Write)  Reader Protocol 2 (Read-Only)  Tag Protocol  Speed (mph)  Vehicles  Trials
1           6C (3M)           6C                              TDM                            6C            60           Single    50
2           6C (3M)           6C                              TDM                            6C            60           Triple    50
3           6C (3M)           6C                              TDM                            6C            80           Single    50
4           6C (3M)           6C                              TDM                            6C            80           Triple    50
5           6C (3M)           6C                              SeGo                           6C            60           Single    50
6           6C (3M)           6C                              SeGo                           6C            60           Triple    50
7           6C (3M)           6C                              SeGo                           6C            80           Single    50
8           6C (3M)           6C                              SeGo                           6C            80           Triple    50
9           TDM (Kapsch)      TDM                             6C                             TDM           60           Single    50
10          TDM (Kapsch)      TDM                             6C                             TDM           60           Triple    50
11          TDM (Kapsch)      TDM                             6C                             TDM           80           Single    50
12          TDM (Kapsch)      TDM                             6C                             TDM           80           Triple    50
13          TDM (Kapsch)      TDM                             SeGo                           TDM           60           Single    50
14          TDM (Kapsch)      TDM                             SeGo                           TDM           60           Triple    50
15          TDM (Kapsch)      TDM                             SeGo                           TDM           80           Single    50
16          TDM (Kapsch)      TDM                             SeGo                           TDM           80           Triple    50
17          SeGo (TransCore)  SeGo                            6C                             SeGo          60           Single    50
18          SeGo (TransCore)  SeGo                            6C                             SeGo          60           Triple    50
19          SeGo (TransCore)  SeGo                            6C                             SeGo          80           Single    50
20          SeGo (TransCore)  SeGo                            6C                             SeGo          80           Triple    50
21          SeGo (TransCore)  SeGo                            TDM                            SeGo          60           Single    50
22          SeGo (TransCore)  SeGo                            TDM                            SeGo          60           Triple    50
23          SeGo (TransCore)  SeGo                            TDM                            SeGo          80           Single    50
24          SeGo (TransCore)  SeGo                            TDM                            SeGo          80           Triple    50
Total                                                                                                                             1,200 Trials

The test results collected in Part One will be analyzed to determine:

1. If the Local protocols are not degraded more than the allowable maximum of 60% (using Test Round 1

and Test Round 2 results).

2. If testing in the lab accurately replicates testing in the field (using Test Round 3 and Test Round 4

results).

3. If the vehicle configuration (one vehicle at a time under the gantry vs. three vehicles side-by-side) results

in different performance (number of handshakes) levels (using Test Round 4 results).

Part Two Testing:

Round 5 Test Objective: Determine read and write performance of candidate protocols under various

configurations.

Determine read and write performance for all three (3) Candidate protocols 6C, TDM and SeGo performing read

and write with the Candidate protocol configured read-only for the Local protocols in dual protocol operation.

For planning Part Two of the performance testing, OmniAir assumes the lab will be shown to be an acceptable

surrogate for the field based on results of the Part One tests. This assumption makes it possible to perform most

of the read and write performance tests in the lab.

Round 5 Test Objective will measure read and write performance in the lab, where there is one lane, and all test
vehicles drive at designated speeds of 60 or 100 MPH. There will be 1,609 – 3,812 trials per scenario for each
Candidate Reader in dual protocol mode, with the Candidate Protocol paired in turn with each Local Protocol for
that reader. The tag protocol will match the Candidate protocol in use for each scenario. The speeds tested will
alternate between 60 and 100 mph between different scenarios. The increased number of trials provides the amount
necessary for statistical significance. There is a total of 19,308 - 45,744 trials in Round 5.

Note: All twelve of these test scenarios will be performed, regardless of outcome of previous Round 5 tests.

Each test will result in: a pass; a fail; or an inconclusive result. A pass or fail can occur at an intermediate point

(with as few as 1,609 trials) if the number of trial failures meets the criteria in Table 18 of this Test Plan.
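The Round 5 totals follow from the per-scenario range and the twelve scenarios. The short sketch below reproduces that arithmetic and also estimates how many runs each test vehicle would make. The per-vehicle figure is only an illustration: it assumes the 10 test vehicles described in Section 4.5 share the trials evenly, which the plan does not state, and the function names are hypothetical.

```python
import math
from typing import Tuple

SCENARIOS = 12
MIN_TRIALS_PER_SCENARIO = 1609
MAX_TRIALS_PER_SCENARIO = 3812
TEST_VEHICLES = 10   # assumption: trials are shared evenly across vehicles


def round5_total_range() -> Tuple[int, int]:
    """Minimum and maximum total trials across all Round 5 scenarios."""
    return (SCENARIOS * MIN_TRIALS_PER_SCENARIO,
            SCENARIOS * MAX_TRIALS_PER_SCENARIO)


def runs_per_vehicle(trials_in_scenario: int) -> int:
    """Runs each vehicle needs so the scenario reaches the trial count."""
    return math.ceil(trials_in_scenario / TEST_VEHICLES)


if __name__ == "__main__":
    print(round5_total_range())   # (19308, 45744)
    print(runs_per_vehicle(MIN_TRIALS_PER_SCENARIO),
          runs_per_vehicle(MAX_TRIALS_PER_SCENARIO))
```

The range (19,308 to 45,744) matches the totals stated for Round 5. The stopping criteria themselves are defined in Table 18 and are not reproduced here.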

Table 11 - Round 5 Scenarios

Test Round 5 – Read and Write Performance
Purpose: To determine read and write performance of candidate protocols under various configurations.
Entrance Criteria: Part One Complete & Positive Lab/Field Correlation Shown
Success Criteria: See discussion
Test Location: LAB
Lane Configuration: ORT (Single Lane)
Reader Configuration: Dual Protocol

Scenario #  Candidate Reader  Reader Protocol 1 (Read/Write)  Reader Protocol 2 (Read-Only)  Tag Protocol  Speed (mph)  Trials
1           6C (3M)           6C                              TDM                            6C            100          1,609-3,812
2           6C (3M)           6C                              TDM                            6C            60           1,609-3,812
3           6C (3M)           6C                              SeGo                           6C            100          1,609-3,812
4           6C (3M)           6C                              SeGo                           6C            60           1,609-3,812
5           TDM (Kapsch)      TDM                             6C                             TDM           100          1,609-3,812
6           TDM (Kapsch)      TDM                             6C                             TDM           60           1,609-3,812
7           TDM (Kapsch)      TDM                             SeGo                           TDM           100          1,609-3,812
8           TDM (Kapsch)      TDM                             SeGo                           TDM           60           1,609-3,812
9           SeGo (TransCore)  SeGo                            6C                             SeGo          100          1,609-3,812
10          SeGo (TransCore)  SeGo                            6C                             SeGo          60           1,609-3,812
11          SeGo (TransCore)  SeGo                            TDM                            SeGo          100          1,609-3,812
12          SeGo (TransCore)  SeGo                            TDM                            SeGo          60           1,609-3,812
Total                                                                                                                   19,308 - 45,744 Trials

Part Three Testing:

Round 6 Test Objective: Determine read and write performance of candidate protocols when three vehicles

are simultaneously in the ORT zone.

After completion of Parts One and Two, the following data will be available for consideration by IBTTA:

1. The results of the lab testing in Part Two. This will include which read and write tests have passed,

failed, or were inconclusive.

2. Whether the correlations proven in Part One (Rounds 3 and 4) show that testing is necessary to cover all

the required variable combinations (e.g. single lane vs. three lane).

3. An accurate current budget, based on the cost and schedules to complete the lab testing in Part Two.

Note this could vary significantly based on Part Two’s range of required tests (19,308 – 45,744 trials).

Using the information in the list above, OmniAir will work with IBTTA to finalize the tests to be run in Part Three,

including determination of:

1. Which protocols will be field tested (after consideration of the pass/fail/inconclusive results in Part

Two).

2. Which combinations of variables remain to be tested (after consideration of the correlation testing in

Part One – single vs. three lanes, speed).

See future deliverable, Phase 2 – Part 3 Test Plan, for Round 6 details.

4.5 Transponders

As detailed in the test scripts, each Test Objective comprises twelve (12) to twenty-four (24) Scenarios and uses a variety of transponders that are rotated at various stages. For Rounds 1-3 and Round 5, there will be 10 test vehicles carrying transponders of the protocol under test, each run 5 times to reach the 50 trials for each scenario. Rounds 4 and 6 will use a multiple of 3 cars; the transponders will be reused within each vendor’s testing cycle, as detailed in the Field Test Plan. The total number of transponders, by type, required for each scenario is shown below:

Table 12 - Quantity of Transponders per Scenario for Rounds 1 and 2

Scenario

6C Qty

TDM Qty

SeGo Qty

1

10

2

10

3

10

4

10

5

10

6

10

7

10

8

10

9

10

10

10

11

10

12

10

Table 13 - Quantity of Transponders per Scenario for Round 3

Scenario

6C Qty

TDM Qty

SeGo Qty

1

10

2

10

3

10

4

10

5

10

6

10

7

10

8

10

9

10

10

10

11

10

12

10

13

10

14

10

15

10

16

10

17

10

18

10

Table 14 - Quantity of Transponders per Scenario for Round 4

Scenario

6C Qty

TDM Qty

SeGo Qty

1

10

2

10

3

10

4

10

5

10

6

10

7

10

8

10

9

10

10

10

11

10

12

10

13

10

14

10

15

10

16

10

17

10

18

10

19

10

20

10

21

10

22

10

23

10

24

10

Table 15 - Quantity of Transponders per Scenario for Round 5

Scenario

6C Qty

TDM Qty

SeGo Qty

1

10

2

10

3

10

4

10

5

10

6

10

7

10

8

10

9

10

10

10

11

10

12

10
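The rotation described at the start of this section for Rounds 1-3 and Round 5 (10 test vehicles, each run 5 times, for 50 trials per scenario) can be sketched as follows. The vehicle labels and the function name are hypothetical; the actual run order is defined in the test scripts.

```python
from itertools import product

VEHICLES_PER_SCENARIO = 10  # one transponder of the protocol under test per vehicle
PASSES_PER_VEHICLE = 5


def build_trial_list(vehicles=VEHICLES_PER_SCENARIO, passes=PASSES_PER_VEHICLE):
    """Enumerate (pass_number, vehicle_id) pairs for one scenario."""
    vehicle_ids = [f"V{n:02d}" for n in range(1, vehicles + 1)]
    # Every vehicle completes pass 1 before any vehicle starts pass 2, and so on.
    return list(product(range(1, passes + 1), vehicle_ids))


trials = build_trial_list()
print(len(trials))            # number of trials for the scenario
print(trials[0], trials[-1])  # first and last entries of the schedule
```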

Spares of each transponder type will also be on hand in the event of transponder failure.

When not mounted in test vehicles, transponders will be stored in a read-prevention container. During test case setup, the required transponders will be removed from the read-prevention container and mounted in the test vehicle using the appropriate locking strips or, for sticker transponders, adhered to the windshield glass. The Test Case Sheet (see Attachment A) will be filled out to document transponder assignment. Attachment A will also be used to manually track each iteration of each test case and note any observed anomalies.

The test case setup/staging area will be located to prevent any potential transponder reads during the setup

process.

Upon removal from a test vehicle (either when required based on transponder rotation or due to transponder

failure), transponders will be returned directly to the read-prevention container.

4.6 Vehicles/Drivers

At the start of each day’s field testing in Round 4 and Round 6 (if executed), the Driver Test Sheet (Attachment

D) and Vehicle Test Sheet (Attachment E) will be completed for inclusion in the test report.

4.7 Data Capture

Each reader system will be connected to a laptop for data capture purposes.

The laptop will be connected to the lane controller port(s) of the reader(s). The laptop will be configured with

software that will capture and log all messages from the reader in their original format with an associated

date/time stamp as well as identifiers for the reader and channel on which the message was received. In

addition, the software will translate the transponder read and write messages into readable ASCII format with

delimiters between all fields to allow for easy identification of all transponder data elements as well as to ease

importing into other software (e.g., MS Excel) for analysis purposes.
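A minimal sketch of the kind of delimited record such capture software might emit. The field names, the pipe delimiter, and the hex rendering of the raw message are assumptions made for illustration; the actual reader message formats are vendor-specific and are not described in this plan.

```python
import csv
import io
from datetime import datetime, timezone


def format_capture_record(timestamp, reader_id, channel, raw_message, fields,
                          delimiter="|"):
    """Build one delimited ASCII line: capture metadata, raw bytes as hex, decoded fields."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, delimiter=delimiter, lineterminator="")
    writer.writerow([
        timestamp.isoformat(timespec="milliseconds"),
        reader_id,
        channel,
        raw_message.hex().upper(),  # original message bytes, preserved losslessly
        *fields,
    ])
    return buffer.getvalue()


line = format_capture_record(
    datetime(2017, 5, 4, 13, 30, 0, 250000, tzinfo=timezone.utc),
    reader_id="READER-1",        # hypothetical reader identifier
    channel=2,
    raw_message=b"\x01\x2a\xff",  # placeholder bytes, not a real protocol frame
    fields=["agency_id=42", "serial=1234567"],
)
print(line)
```

Keeping the raw message alongside the decoded fields means a decoding error can be checked against the original bytes, and a single-character delimiter keeps the log easy to import into a spreadsheet.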

There is a generic minimum number of bits to be read and written for all protocols under test as specified in the

Final Draft NIOP Requirements Document v2.2014.09. final amended CLEAN Section 3.8.1.2:

Fixed Data Storage – Minimum Requirements – Read

While the fields below are proposed for NIOP, they are not a requirement for NIOP testing. Each protocol

currently supports Agency ID and Tag Serial Number at a minimum and it is those fields that we are using to

verify the read. The VersionID and Vehicle Class/Profile fields will not be used.

Table 16 – NIOP Data Fields

READ ONLY

| ID | Name | Proposed # bits | # Possible values | Description | Comments |
|---|---|---|---|---|---|
| R1 | VersionID | 4 | 16 | NTP version | Used to track to which version the memory map conforms |
| R2 | Agency ID | 12 | 4,096 | Operator or Agency code for the tag issuer, which provides a unique identifier for each tag issuer | This new field combines the previously separate StateID and AgencyID fields |
| R3 | Serial Number | 26 | 67,108,864 | A unique value for the tag, to differentiate it from all other tags issued by the tag issuer | |
| R1+R2+R3 | SUBTOTAL | 42 | 274,877,906,944 | | |
| R5 | Vehicle Class/Profile | 11 | 2,048 | For toll systems that cannot determine class at the lane | Vehicle Type: 5 bits (TBD: 32 possible values); Vehicle Axles: 4 bits (0-15); Vehicle Weight: 1 bit (<7k lbs / >=7k lbs); Vehicle rear tires: 1 bit (single/dual) |
| | Read TOTAL | 53 | | | |

READ OR WRITE*

| ID | Name | Proposed # bits | # Possible values | Description | Comments |
|---|---|---|---|---|---|
| WR1 | Vehicle Occupancy | 3 | 8 | For HOV declaration, for use in HOT/managed lanes | Config: TBD; 000: Not a switchable tag; 001: SOV; 010: HOV2; 011: HOV3; 100: Reserved for future use; 101: Reserved for future use; 110: Reserved for future use; 111: Reserved for future use |
| | Read or Write TOTAL | 3 | | | |

*Depending on switching method, this could be a read or write solution

Write

| ID | Name | Proposed # bits | # Possible values | Description | |
|---|---|---|---|---|---|
| W1 | Agency ID | 12 | 4,096 | This provides a unique identifier for each toll operator | The Agency ID of whoever is writing to the tag |
| W2 | Scratch pad | 40 | 1,099,511,627,776 | This section is free for agencies to use as they wish. Possible usage would be Plaza ID: 7 bits; Lane ID: 5 bits; Date/Time: 25 bits (seconds since Jan 01 00:00:00); Occupancy: 3 bits | This can be increased if there is no performance impact. The displayed value represents the minimum requirement |
| | Write TOTAL | 52 | | | |
| | TOTAL BITS RQD | 108 | | | |
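The bit counts in Table 16 can be cross-checked with a short calculation, and the suggested scratch pad usage can be illustrated by packing the four example fields into a 40-bit word. This is a sketch only: the field order inside the scratch pad and all function names are assumptions, since the table lists the field widths but not their layout. Note that the subtotal row lists 42 bits alongside 274,877,906,944 possible values; that figure equals 2^38, which is the number of Agency ID and Serial Number combinations without VersionID.

```python
# Field widths in bits, copied from Table 16.
READ_ONLY = {"VersionID": 4, "Agency ID": 12, "Serial Number": 26,
             "Vehicle Class/Profile": 11}
READ_OR_WRITE = {"Vehicle Occupancy": 3}
WRITE = {"Agency ID": 12, "Scratch pad": 40}


def possible_values(bits):
    """Number of distinct values an unsigned field of this width can hold."""
    return 2 ** bits


read_total = sum(READ_ONLY.values())
write_total = sum(WRITE.values())
total_bits = read_total + sum(READ_OR_WRITE.values()) + write_total
print(read_total, write_total, total_bits)

# Agency ID x Serial Number combinations (38 bits).
agency_serial_values = possible_values(12) * possible_values(26)
print(f"{agency_serial_values:,}")

# Example scratch pad usage from the table. The order below is an assumption.
SCRATCH_LAYOUT = [("plaza_id", 7), ("lane_id", 5), ("seconds", 25), ("occupancy", 3)]


def pack_scratch_pad(**values):
    """Pack the named fields, most significant first, into one integer."""
    word = 0
    for name, width in SCRATCH_LAYOUT:
        value = values[name]
        if not 0 <= value < 2 ** width:
            raise ValueError(f"{name}={value} does not fit in {width} bits")
        word = (word << width) | value
    return word


def unpack_scratch_pad(word):
    """Inverse of pack_scratch_pad: split the integer back into named fields."""
    fields = {}
    for name, width in reversed(SCRATCH_LAYOUT):
        fields[name] = word & ((1 << width) - 1)
        word >>= width
    return fields


example = {"plaza_id": 100, "lane_id": 7, "seconds": 3_600, "occupancy": 2}
packed = pack_scratch_pad(**example)
print(unpack_scratch_pad(packed) == example)
```

The 25-bit Date/Time field holds 33,554,432 values, which is enough to count every second of a 366-day year (31,622,400 seconds).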

4.8 Technology to be Tested

The following equipment will be tested in the lab and field settings, coupling Candidate and Local technologies,

e.g. TDM Transponder with 3M Reader and SeGo Transponders with Kapsch TDM Reader.

• 3M

o 3M Toll RFID 6204 Multiprotocol Readers

o 3M 6C Sticker Transponder

• Kapsch

o Kapsch Janus MPR2 Reader

o Kapsch Hardcase Interior Transponders

• TransCore

o TransCore Encompass 6 Multiprotocol Reader

o TransCore SeGo Sticker Transponders

4.9 Minimum Performance Requirements

The performance requirements from the IBTTA Final Requirements Document, Section 3.7, are shown

below for each reader and tag protocol combination under test in this Test Plan.

For properly equipped vehicles passing completely through the Capture Zone, the RFID subsystem shall