How to Develop the Right Research Questions for Program Evaluation

Learning objectives

By the end of this presentation, you will be able to:

• Understand the importance of research questions
• Understand the four basic steps for developing research questions
• Write research questions for different types of evaluation designs (i.e., process evaluation and outcome evaluation)

Why are research questions important?

• Foundation of a successful evaluation
• Define the topics the evaluation will investigate
• Guide the evaluation planning process
• Provide structure to evaluation activities

[Figure: research questions as the foundation for questionnaire design, data collection, analysis, and conclusions]

Steps for developing research questions

• Step 1: Develop a logic model to clarify program design and theory of change
• Step 2: Define the evaluation’s purpose and scope
• Step 3: Determine the type of evaluation design: process or outcome
• Step 4: Draft and finalize evaluation’s research questions

Step 1: Develop a logic model to clarify the program design

• A logic model is a graphic “snapshot” of how a program works (its theory of change); it communicates the intended relationships among program components.
  – Inputs, activities, and outputs on the left side of the logic model depict a program’s processes/implementation
  – Changes that are expected to result from these processes are called outcomes and are depicted on the right side of the logic model
• Research questions should test some aspect of the program’s theory of change as depicted in a logic model.

Example logic model for health literacy program

INPUTS (what we invest)
• Funding
• 4 FT staff
• 100 AmeriCorps members serve as health care advisors
• 10 partnerships with community-based organizations
• Member training

ACTIVITIES (what we do)
• Develop and disseminate accurate, accessible, and actionable health and safety information
• Conduct health literacy workshops
• Provide individualized health literacy sessions

OUTPUTS (direct products from program activities)
• 500 health and safety education materials disseminated
• 4 half-day workshop sessions (at least 20 residents per session; 80 total)
• 100 individual and small group health literacy sessions (60 mins each) serving 300 people

OUTCOMES

Short-Term (changes in knowledge, skills, attitudes, opinions)
• Increase in residents’ understanding of prevention and self-management of conditions
• Increase in residents’ motivation to adopt good health practices
• Increase in residents’ ability to search for and use health information

Medium-Term (changes in behavior or action that result from participants’ new knowledge)
• Increase in residents’ adoption of healthy behaviors and recommendations of the program (such as getting necessary medical tests)

Long-Term (meaningful changes, often in their condition or status in life)
• Improved health and wellness status and quality of life for residents in the area
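As an illustration only, the example logic model above can be written down as a small data structure, which makes the left side (process) and right side (outcomes) explicit. This is a minimal sketch in Python; the `LogicModel` class and its `side_of` method are hypothetical names introduced here, not part of the presentation, and only a few entries from the example are included.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class LogicModel:
    """Hypothetical container: one list of entries per logic model column."""
    inputs: List[str]
    activities: List[str]
    outputs: List[str]
    short_term: List[str]
    medium_term: List[str]
    long_term: List[str]

    def side_of(self, component: str) -> str:
        """Return 'process' for left-side components, 'outcome' for right-side ones."""
        if component in self.inputs + self.activities + self.outputs:
            return "process"
        if component in self.short_term + self.medium_term + self.long_term:
            return "outcome"
        raise ValueError(f"{component!r} is not in the logic model")


# A few entries copied from the example health literacy logic model.
health_literacy = LogicModel(
    inputs=["Funding", "4 FT staff", "Member training"],
    activities=["Conduct health literacy workshops"],
    outputs=["4 half-day workshop sessions"],
    short_term=["Increase in residents' motivation to adopt good health practices"],
    medium_term=["Increase in residents' adoption of healthy behaviors"],
    long_term=["Improved health and wellness status"],
)

print(health_literacy.side_of("Member training"))
print(health_literacy.side_of("Improved health and wellness status"))
```

A research question about "Member training" would fall on the process side, while one about "Improved health and wellness status" would fall on the outcome side, which is the distinction Step 3 relies on.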

Step 2: Define the evaluation’s purpose and scope

As you define the evaluation’s purpose and scope, the following questions should be considered:

• Why is the evaluation being done? What information do stakeholders need or hope to gain from the evaluation?
• What requirements does the evaluation need to fulfill?
• Which components of the program are the strongest candidates for evaluation?
• How does the evaluation align with the long-term research agenda for your program?
• What resources (budget, staff, time) are available for the evaluation?

• Why is the evaluation being done? What information do stakeholders need or hope to gain from the evaluation?
  – Each evaluation should have a primary purpose
• What requirements does the evaluation need to fulfill?
  – Funders may have specific expectations
• Which components of the program are the strongest candidates for evaluation?
  – You do not need to evaluate your whole program at once


• How does the evaluation align with the long-term research agenda for your program?
  – What do you want to know in 5 or 10 years?
• What resources (budget, staff, time) are available for the evaluation?
  – Evaluation’s scope should align with resources

Building Evidence of Effectiveness

[Figure: stages of evidence building, running from “Evidence Informed” to “Evidence Based”]

Stages:
• Identify and pilot implement a program design
• Ensure effective full implementation
• Assess program’s outcomes
• Obtain evidence of positive program outcomes
• Attain strong evidence of positive program outcomes

Activities shown alongside the stages:
– Gather evidence that supports program design; develop logic model; pilot implementation
– Performance measures (outputs); process evaluation
– Performance measures (outcomes); process and/or outcome evaluation
– Outcome evaluation; impact evaluation

Step 3: Determine type of evaluation: process or outcome

Process Evaluation
• Goal is generally to inform changes or improvements in the program’s operations
• Documents what the program is doing and to what extent and how consistently the program has been implemented as intended
• Does not require a comparison group
• Includes qualitative and quantitative data collection

Outcome Evaluation
• Goal is to identify the results or effects of a program
• Measures program beneficiaries' changes in knowledge, attitude(s), behavior(s) and/or condition(s) that result from a program
• May include a comparison group (impact evaluation)
• Typically requires quantitative data and advanced statistical methods

Step 4: Draft and finalize evaluation’s research questions

Basic principles in designing research questions

Research questions are a list of questions to be answered at the end of the evaluation.

Research questions should be:
• Clear, specific, and well-defined
• Focused on a program or program component
• Measurable by the evaluation
• Aligned with your logic model

Differences in research questions for process and outcome evaluations

Research questions for process-focused evaluations ask:
Who? What? When? Where? Why? How?

About:
• Inputs/resources
• Program activities
• Outputs
• Stakeholder views

Research questions for outcome-focused evaluations ask about:
Changes? Effects? Impacts?

In:
• (Short-term) Knowledge, skills, attitudes, opinions
• (Medium-term) Behaviors, actions
• (Long-term) Conditions, status

Basic principles in designing research questions for a process evaluation

Research questions for a process evaluation should:
  – Focus on the program or a program component
  – Ask who, what, where, when, why, or how?
  – Use exploratory verbs, such as report, describe, discover, seek, or explore

Template for developing general research questions: process evaluation

[Who, what, where, when, why, how] is the [program, model, component] for [evaluation purpose]?

Examples:
• How is the program being implemented?
• How do program beneficiaries describe their program experiences?
• What resources are being described as needed for implementing the program?

Examples of research questions for a process evaluation

Broad to More Specific

• How is the program being implemented?
  – Are staff implementing the program within the same timeframe?
  – Are staff implementing the program with the same intended target population?
  – What variations in implementation, if any, occur by site? Why are variations occurring? Are they likely to affect program outcomes?
  – Are there unique challenges to implementing the program by site?
• How do program beneficiaries describe their program experiences?
  – What are the benefits for program beneficiaries?
  – Are there any unintended consequences of program participation?
• What resources are being described as needed for implementing the program?
  – What recommendations do program staff offer for future program implementers?

Research Questions Checklist

Exercise #1: Assessing potential research questions for a process evaluation

General research question: Is the program being implemented as intended?

Assess whether each of the following is a good sub-question for the process evaluation:
• Are all AmeriCorps members engaged in delivering health literacy activities?
• To what extent are AmeriCorps members receiving the required training and supervision?
• Are program participants more likely to adopt preventive health practices than non-participants?
• To what extent are community partners faithfully replicating the program in other states?

Exercise #1: Suggested answers

• Are all AmeriCorps members engaged in delivering health literacy activities?
  – Too vague
  – Better: To what extent are AmeriCorps members consistently implementing the program with the same target population across all sites?
• To what extent are AmeriCorps members receiving the required training and supervision?
  – Good question, assuming required training and supervision are defined
• Are program participants more likely to adopt preventive health practices than non-participants?
  – This is not appropriate for a process evaluation
• To what extent are community partners faithfully replicating the program in other states?
  – Not aligned with program logic model
  – Better: What variations in community partners’ participation, if any, occur by site?

Basic principles in designing research questions for an outcome evaluation

Research questions for an outcome evaluation should:
• Be direct and specific as to the theory or assumption being tested (i.e., program effectiveness or impact)
• Examine changes, effects, or impacts
• Specify the outcome(s) to be measured

Template for developing research questions: outcome evaluation

Did [model, program, program component] have a [change, effect] on [outcome(s)] for [individuals, groups, or organizations]?

Examples:
• Did program beneficiaries change their (knowledge, attitude, behavior, or condition) after program completion?
• Did all types of program beneficiaries benefit from the program or only specific subgroups?

Template for developing research

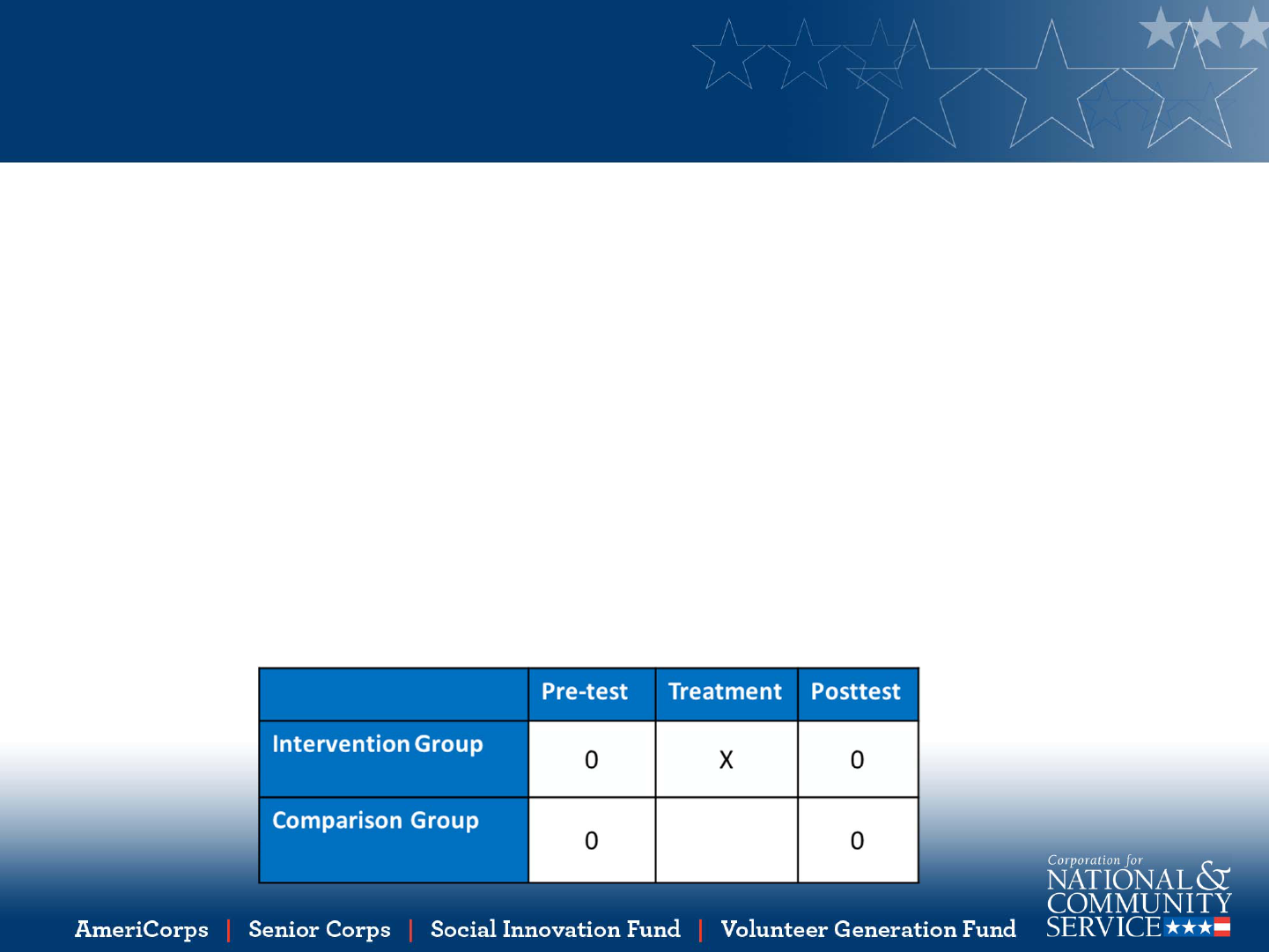

questions: impact evaluation

Did [model, program, program component]

have an [impact] on [outcome(s)] for

[individuals, groups, or organizations] relative

to a comparison group?

Example:

Are there differences in outcomes for program

participants compared to those not in the program?
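To make the bracketed slots concrete, the outcome and impact templates above can be filled in mechanically. The following is a minimal sketch in Python; the function names `outcome_question` and `impact_question` and the sample wording are assumptions made for illustration and do not come from the presentation.

```python
def _article(word: str) -> str:
    """Pick 'a' or 'an' based on the first letter (a simple heuristic)."""
    return "an" if word[0].lower() in "aeiou" else "a"


def outcome_question(program: str, change: str, outcome: str, group: str) -> str:
    """Fill the outcome evaluation template: no comparison group."""
    return f"Did {program} have {_article(change)} {change} on {outcome} for {group}?"


def impact_question(program: str, outcome: str, group: str) -> str:
    """Fill the impact evaluation template: adds the comparison group clause."""
    return (
        f"Did {program} have an impact on {outcome} for {group} "
        "relative to a comparison group?"
    )


# Sample wording loosely based on the example health literacy program.
print(outcome_question(
    "the health literacy program", "effect",
    "understanding of prevention", "residents",
))
print(impact_question(
    "the health literacy program",
    "adoption of healthy behaviors", "residents",
))
```

The only structural difference between the two functions is the closing clause about a comparison group, which mirrors the difference between the two templates. A generated question is a starting draft; it still needs to be checked against the logic model and the evaluation's purpose.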

Exercise #2: Developing research questions for an outcome or impact evaluation

For this exercise, use the program’s logic model to identify which outcome(s) to include in the evaluation.

Consider the following:
• Which outcome(s) can be achieved within the timeframe of the evaluation (covering at least one year of program activities)?
• Which outcomes are feasible to measure?
• What data are already available?

Outcome evaluation:

Did [model, program, program component] have a [change, effect] on [outcome(s)] for [individuals, groups, or organizations]?

Impact evaluation:

Did [model, program, program component] have an [impact] on [outcome(s)] for [individuals, groups, or organizations] relative to a comparison group?

Research Questions Checklist

Exercise #2: Suggested answers

For outcome evaluations that do not include a comparison group:
• Did program participants increase their understanding of prevention after program completion?
• Did program participants feel more confident in the self-management of their pre-existing conditions after program completion?
• Did program participants improve their skills in searching for and using health information after program completion?
• Were program participants more likely to search for and use health information on their own after program completion?

For impact evaluations that include a comparison group:
• Are program participants more likely to adopt healthy behaviors compared to similar individuals who did not participate in the program?
• Are program participants more likely to obtain medical tests and procedures compared to similar individuals who did not participate in the program?
• Does the impact of the program vary by program participants’ age, gender, or pre-existing medical condition?

Step 4: Draft and finalize evaluation’s research questions

Consider the following:
• Do the research question(s) fit with the goals for the evaluation?
• Do the research question(s) align with the program’s logic model and the components of the program that will be evaluated?
• Are these questions aligned with your funder's requirements?
• What kinds of constraints (costs, time, personnel, etc.) are likely to be encountered in addressing these research question(s)?
• Do the research questions fit into the program’s long-term research agenda?

Important points to remember

• Research questions are the keystone in an evaluation from which all other activities evolve
• Research questions vary depending on whether you will conduct a process or an outcome evaluation
• Prior to developing research questions, define the evaluation’s purpose and scope and decide the type of evaluation design: process or outcome
• Research questions should be clear, specific, and well-defined
• Research questions should be developed in consideration of your long-term research agenda

Resources

• CNCS’s Knowledge Network
  – http://www.nationalservice.gov/resources/americorps/evaluation-resources-americorps-state-national-grantees
• The American Evaluation Association
  – http://www.eval.org
• The Evaluation Center
  – http://www.wmich.edu/evalctr/
• The Community Tool Box
  – http://ctb.ku.edu/en/table-of-contents/evaluate/evaluate-community-interventions/choose-evaluation-questions/main
• Choosing the Right Research Questions
  – http://www.wcasa.org/file_open.php?id=1045

Questions and Answers